When to Use Leveled Compaction in Cassandra

The Leveled Compaction Strategy was introduced in Cassandra 1.0 to address shortcomings of the size-tiered compaction strategy for some use cases. Unfortunately, it's not always clear which strategy to choose. This post will provide some guidance for choosing one compaction strategy over the other.

The Difference Between Size-Tiered and Leveled Compaction

Leveled compaction has one basic trait that you can use to judge whether it's a good fit or not: it spends more I/O on compaction in order to guarantee how many SSTables a row may be spread across. With size-tiered compaction, you get no such guarantee, though the maximum number of SSTables a row may be spread across tends to hover around 10.

When using the size-tiered compaction strategy, if you update a row frequently, it may be spread across many SSTables:

Gray blocks are SSTables; blue lines denote fragments of a single row

When this row is read, Cassandra needs to fetch a fragment of the row from almost every SSTable. This can be expensive in terms of the number of disk seeks required.

When you look at the picture for leveled compaction, things are quite different:

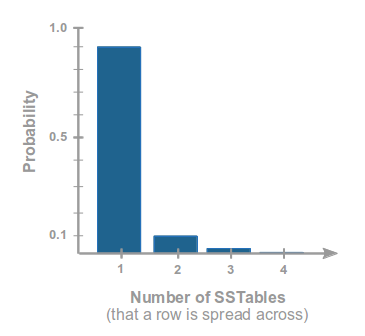

With leveled compaction, if you read a row, you only need to read from a single SSTable 90% of the time. In fact, if we let N be the number of SSTables you must read from to complete a row read, the probability of having to read from N different SSTables decreases exponentially as N increases, which gives us a probability distribution that looks like this:

Probability distribution for the number of SSTables holding fragments of a single row

If you do a bit of math, you'll see that the expected number of SSTables that must be read when reading a random row is ~1.111. This is much lower than the number of SSTables that must be read with size-tiered compaction. Of course, if you stop updating the row, then size-tiered compaction will eventually merge the row fragments into a single SSTable. But, as long as you continue to update the row, you will continue to spread it across multiple SSTables under size-tiered compaction. With leveled compaction, even if you continue to update the row, the number of SSTables the row is spread across stays very low.
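The "bit of math" can be reproduced with a short sketch. It assumes the distribution is geometric: a read touches exactly one SSTable 90% of the time, and each additional SSTable is ten times less likely than the one before. That model is an assumption chosen to match the 90% and ~1.111 figures quoted above; the function name and its parameters are hypothetical and not part of Cassandra.

```python
def expected_sstables_per_read(p_single: float = 0.9, max_n: int = 50) -> float:
    """Expected number of SSTables read for one row.

    Assumes P(N = n) = p_single * (1 - p_single) ** (n - 1), a geometric
    distribution. This is an illustrative model, not a Cassandra API.
    """
    return sum(
        n * p_single * (1 - p_single) ** (n - 1)
        for n in range(1, max_n + 1)
    )


if __name__ == "__main__":
    # The sum converges to 1 / p_single, which is about 1.111 for p_single = 0.9.
    print(round(expected_sstables_per_read(), 3))
```

Under this assumption the closed form is simply 1 / 0.9, so the finite sum is only there to make the per-term probabilities visible.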

When Leveled Compaction Is a Good Option

High Sensitivity to Read Latency

In addition to lowering read latencies in general, leveled compaction lowers the amount of variability in read latencies. If your application has strict latency requirements for the 99th percentile, leveled compaction may be the only reliable way to meet those requirements, because it gives you a known upper bound on the number of SSTables that must be read.

High Read/Write Ratio

If you perform at least twice as many reads as you do writes, leveled compaction may actually save you disk I/O, despite consuming more I/O for compaction. This is especially true if your reads are fairly random and don't focus on a single, hot dataset.

Rows Are Frequently Updated

Whether you're dealing with skinny rows where columns are overwritten frequently (like a "last access" timestamp in a Users column family) or wide rows where new columns are constantly added, when you update a row with size-tiered compaction, it will be spread across multiple SSTables. Leveled compaction, on the other hand, keeps the number of SSTables that the row is spread across very low, even with frequent row updates.

If you're using wide rows, switching to a new row periodically can alleviate some of the pain of size-tiered compaction, but not to the extent that leveled compaction can. If you're using skinny rows, Cassandra 1.1 has a new optimization for size-tiered compaction that helps to merge fragmented rows more quickly (though it does not reclaim the disk space used by those fragments).

Deletions or TTLed Columns in Wide Rows

If you maintain event timelines in wide rows and set TTLs on the columns in order to limit the timeline to a window of recent time, those columns will be replaced by tombstones when they expire. Similarly, if you use a wide row as a work queue, deleting columns after they have been processed, you will wind up with a lot of tombstones in each row.

Column tombstones are just like normal columns in terms of how many SSTables need to be read. So, even if there aren't many live columns still in a row, there may be tombstones for that row spread across extra SSTables; these too need to be read by Cassandra, potentially requiring additional disk seeks. This problem is most visible when deletions or expirations happen on the same end of the row that you start your slice from.

It's worth noting that Cassandra 1.2 will attempt to purge tombstones more frequently under size-tiered compaction, lessening the impact of this issue.

When Leveled Compaction May Not Be a Good Option

Your Disks Can't Handle the Compaction I/O

If your cluster is already pressed for I/O, switching to leveled compaction will almost certainly make the problem worse. This is the primary downside of leveled compaction and the main problem you should check for in advance; see the notes below about write sampling.

Write-Heavy Workloads

It may be difficult for leveled compaction to keep up with write-heavy workloads, and because reads are infrequent, there is little benefit to the extra compaction I/O.

Rows Are Write-Once

If your rows are always written entirely at once and are never updated, they will naturally always be contained in a single SSTable when using size-tiered compaction. Thus, there's really nothing to gain from leveled compaction.
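The rules of thumb from the two sections above can be collected into a small checklist. This is only a sketch of the guidance in this post, not a tuning tool: the `Workload` fields and the function name are hypothetical, and treating "write-heavy" as "more writes than reads" is an assumption made for illustration. It lists reasons for and against rather than giving a verdict, because empirical testing is still the only way to be sure.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Workload:
    """Hypothetical description of a column family's workload."""
    reads_per_sec: float
    writes_per_sec: float
    rows_updated_after_write: bool   # False means rows are write-once
    disks_have_io_headroom: bool     # False means already pressed for I/O


def leveled_compaction_notes(w: Workload) -> List[str]:
    """Return reasons for and against leveled compaction, per this post."""
    notes = []
    if not w.disks_have_io_headroom:
        notes.append("against: disks are already pressed for I/O")
    if w.rows_updated_after_write:
        notes.append("for: rows are updated after the initial write")
    else:
        notes.append("against: rows are write-once")
    if w.reads_per_sec > 0 and w.reads_per_sec >= 2 * w.writes_per_sec:
        notes.append("for: at least twice as many reads as writes")
    elif w.writes_per_sec > w.reads_per_sec:
        # Assumption: "write-heavy" is taken to mean more writes than reads.
        notes.append("against: write-heavy workload")
    return notes


if __name__ == "__main__":
    example = Workload(3000.0, 1000.0, True, True)
    for note in leveled_compaction_notes(example):
        print(note)
```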

Additional Advice on Leveled Compaction

Write Sampling

It can be difficult to know ahead of time if your nodes will be able to keep up with compaction after switching to the leveled compaction strategy. The only way to be sure is to test empirically, and the best way to do that is with live traffic sampling. If you're already in production, I highly recommend trying this before you flip the switch on your cluster.

SSDs and Datasets That Fit in Memory

Though size-tiered compaction works quite well with SSDs, using leveled compaction in combination with SSDs can allow for very low-latency, high-throughput reads. Similarly, if your entire dataset fits into memory, size-tiered compaction may work very well, but leveled compaction can provide an extra boost. Besides reducing disk I/O during reads, leveled compaction lowers CPU consumption and the number of reads from memory. Merging row fragments consumes CPU, so the fewer SSTables a row is split across, the lower the CPU consumption. Bloom filter checks, which have a CPU and memory access cost, are also reduced by leveled compaction.

Lower Disk Space Requirements

Size-tiered compaction requires at least as much free disk space for compaction as the size of the largest column family. Leveled compaction needs much less space for compaction, only 10 * sstable_size_in_mb. However, even if you're using leveled compaction, you should leave much more free disk space available than this to accommodate streaming, repair, and snapshots, which can easily use 10GB or more of disk space. Furthermore, disk performance tends to decline after 80 to 90% of the disk space is used, so don't push the boundaries.
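As a rough illustration of the two compaction headroom rules above, the following sketch compares them. The function names are hypothetical, and the example values (a 160 MB `sstable_size_in_mb` and a 500 GB column family) are made up for the arithmetic; check your own configuration rather than relying on them. The result covers compaction only, not the extra space needed for streaming, repair, and snapshots.

```python
def leveled_headroom_mb(sstable_size_in_mb: int) -> int:
    """Free space leveled compaction needs: 10 * sstable_size_in_mb."""
    return 10 * sstable_size_in_mb


def size_tiered_headroom_mb(largest_column_family_mb: int) -> int:
    """Free space size-tiered compaction needs: at least the size of the
    largest column family."""
    return largest_column_family_mb


if __name__ == "__main__":
    # Hypothetical example values, chosen only to show the arithmetic.
    sstable_size_in_mb = 160
    largest_cf_mb = 500 * 1024  # a 500 GB column family

    print("leveled:", leveled_headroom_mb(sstable_size_in_mb), "MB")
    print("size-tiered: at least", size_tiered_headroom_mb(largest_cf_mb), "MB")
```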

In addition to lowering the amount of free disk space needed, leveled compaction also consumes less disk space overall, because it frequently merges overwrites and other redundant information.