LangChain and Astra DB: A Rock-Solid Foundation for Building Generative AI Applications

Building a generative AI app requires a rock-solid database. But building on that stable foundation can be a complex and laborious process. That’s exactly why we support LangChain, a framework that simplifies building GenAI applications.

Integrated with the highly scalable Apache Cassandra® and DataStax Astra DB, LangChain enables developers to work with a GenAI-centric framework and enjoy all the advantages of a vector database, without the need to code to the API of a particular data store.

GenAI apps and agents need speed and scale. Consider a browser plugin that is constantly writing vectors of what’s been browsed, and constantly reading vectors to discover related topics. You’ll need a vector database that can ingest and index data in real time from a global user base, support synchronous operations, and deliver low-latency responses.
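To make the read/write pattern concrete, here is a minimal in-memory sketch of what that plugin does: write an embedding for each page visited, then read back the most similar pages. Everything in it is an assumption for illustration (the `ToyVectorStore` class, the example URLs, and the hand-written three-dimensional vectors). A real deployment would use an embedding model and a vector database rather than a Python dictionary and a linear scan.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


class ToyVectorStore:
    """Hypothetical stand-in for a vector database: a dict plus a linear scan."""

    def __init__(self) -> None:
        self._rows: Dict[str, Vector] = {}

    def write(self, page_url: str, embedding: Vector) -> None:
        # The "constantly writing" half of the workload.
        self._rows[page_url] = embedding

    def related(self, query: Vector, k: int = 2) -> List[Tuple[str, float]]:
        # The "constantly reading" half: score every row, keep the top k.
        scored = [
            (url, cosine_similarity(query, vec)) for url, vec in self._rows.items()
        ]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


store = ToyVectorStore()
# Made-up embeddings; a real plugin would get these from an embedding model.
store.write("https://example.com/cassandra-intro", [0.9, 0.1, 0.0])
store.write("https://example.com/vector-search", [0.8, 0.3, 0.1])
store.write("https://example.com/sourdough-recipe", [0.0, 0.2, 0.9])

top = store.related([1.0, 0.2, 0.0], k=2)
for url, score in top:
    print(f"{score:.3f}  {url}")
```

The linear scan is the part that stops working at scale: every read touches every row. That is the gap an indexed vector database is meant to close.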

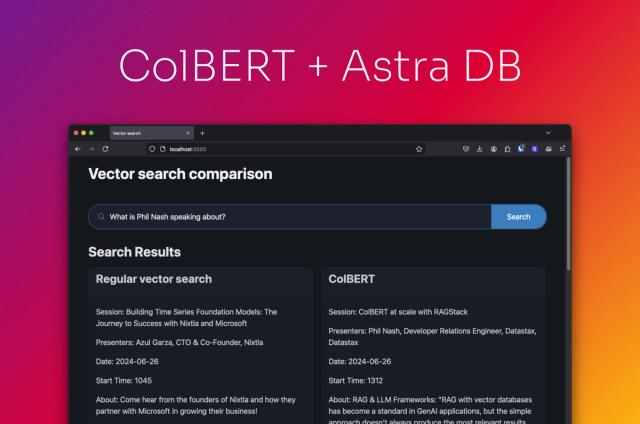

The Cassandra community spent four years building a new indexing capability for Cassandra that now supports our vector implementation. While most vendors are still using the HNSW libraries from Lucene, we knew that to support high-growth use cases we’d have to deliver something more robust that adds parallelism and real-time indexing alongside other significant performance improvements. We introduced JVector to address those needs and to deliver a major improvement in vector search performance. JVector implements state-of-the-art algorithms inspired by DiskANN and related research, and takes full advantage of JVM SIMD acceleration.

With that solid foundation in place, we just needed to build the LangChain house on top of it.

LangChain is a framework for developing applications powered by large language models (LLMs), and is specifically designed for GenAI applications that require:

- An interface between apps and LLMs

- An interface between data and the tools that prepare and store that data for the application

- Composability: the ability to create and integrate with AI agents that interact with their environment and ecosystem

LangChain gives developers two kinds of building blocks: “components,” which are abstractions between apps and language models, and “chains,” which assemble the components needed for specific use cases and are designed to be customizable.
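The idea of components and chains can be sketched in plain Python. This is a conceptual illustration only, not LangChain's API: `prompt_template`, `fake_llm`, `strip_brackets`, and `make_chain` are hypothetical names, and the "model" is a stub that echoes its prompt.

```python
from typing import Callable, List

# In this sketch a component is just a function from text to text.
Component = Callable[[str], str]


def prompt_template(question: str) -> str:
    """Component 1: wrap the user's question in a prompt."""
    return f"Answer concisely: {question}"


def fake_llm(prompt: str) -> str:
    """Component 2: stand-in for a real language model call (assumption)."""
    return f"[model output for: {prompt}]"


def strip_brackets(text: str) -> str:
    """Component 3: a trivial output parser that removes the stub's brackets."""
    return text.strip("[]")


def make_chain(components: List[Component]) -> Component:
    """Assemble components into a chain that runs them in order."""

    def run(value: str) -> str:
        for component in components:
            value = component(value)
        return value

    return run


qa_chain = make_chain([prompt_template, fake_llm, strip_brackets])
result = qa_chain("What is a vector store?")
print(result)
```

Because each component shares the same shape, customizing a chain means swapping one function for another, for example replacing `fake_llm` with a call to a real model.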

LangChain provides a plugin architecture for adding vector stores. We have contributed a connector for Cassandra and Astra DB so that LangChain developers can easily and naturally add either one as a vector store in new and existing retrieval-augmented generation (RAG) applications. In a RAG application, a model is provided with additional data or context from other sources, most often a database that can store vectors.
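The RAG flow itself is short enough to sketch without any libraries: embed the question, retrieve the closest stored text, and put that text into the prompt. The code below is a toy under stated assumptions. `toy_embed` counts words from a tiny fixed vocabulary instead of calling an embedding model, the documents live in a Python list instead of Cassandra or Astra DB, and the final prompt is printed rather than sent to an LLM. None of these names come from LangChain or CassIO.

```python
import re
from typing import List

# Hypothetical vocabulary for the toy embedding; real embeddings are learned.
VOCAB = ["cassandra", "vector", "langchain", "bread", "index"]

DOCUMENTS = [
    "Cassandra supports vector search through a new index.",
    "LangChain chains assemble components for LLM apps.",
    "Sourdough bread needs a long proof.",
]


def toy_embed(text: str) -> List[float]:
    """Count how often each vocabulary term appears in the text."""
    words = re.findall(r"[a-z]+", text.lower())
    return [float(words.count(term)) for term in VOCAB]


def score(a: List[float], b: List[float]) -> float:
    """Dot-product similarity between two toy embeddings."""
    return sum(x * y for x, y in zip(a, b))


def retrieve(question: str, k: int = 1) -> List[str]:
    """Return the k documents whose embeddings score highest against the question."""
    query = toy_embed(question)
    ranked = sorted(
        DOCUMENTS, key=lambda doc: score(query, toy_embed(doc)), reverse=True
    )
    return ranked[:k]


def build_prompt(question: str, context: List[str]) -> str:
    """Augment the question with retrieved context before it goes to a model."""
    joined = "\n".join(f"- {passage}" for passage in context)
    return f"Use only this context:\n{joined}\n\nQuestion: {question}"


question = "How does Cassandra index a vector?"
retrieved = retrieve(question, k=1)
prompt = build_prompt(question, retrieved)
print(prompt)
```

In a production application the list and the sort would be replaced by a vector store query, which is the role the Cassandra and Astra DB connector plays.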

If you're building a high-growth generative AI app or already store your data in Cassandra, you'll require a powerful Python library to abstract the process of accessing Cassandra; we developed CassIO to help with this (check out this notebook for a code-centric quickstart).

The enterprises we work with are already using this design pattern to deliver chatbots, recommendation systems, and more. We can’t wait to see what you create.

You can get started with a code example straight away and, as always, reach out through the chat function on astra.datastax.com if you have questions.

Join us on October 26 at 9am PT for a live webinar where LangChain founder and CEO Harrison Chase and SkyPoint founder and CEO Tisson Mathew discuss their experience building production RAG apps that use customer data with LangChain, LLMs, and vector search.