Bridge the Demo-to-Production Gap: Vector Search Is Generally Available!

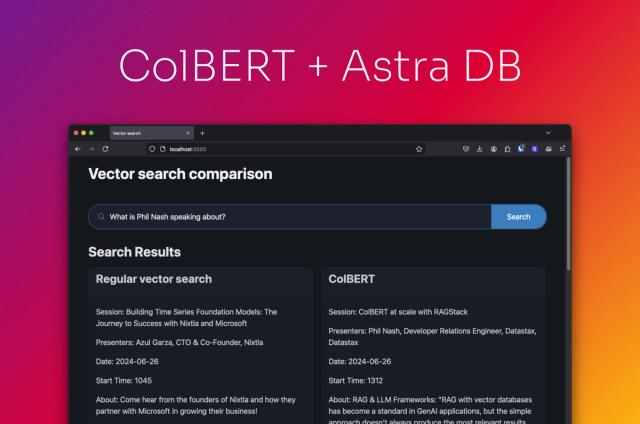

Almost every enterprise has some sort of generative AI project underway, but only a handful of these actually bridge the “demo-to-production gap” and become applications that drive real business impact. Vector search is a cutting-edge approach to searching and retrieving data—and a critical component to delivering game-changing generative AI applications.

Today, we’re excited to announce the general availability of vector search in DataStax Astra DB, our database-as-a-service (DBaaS) built on open source Apache Cassandra®, to help close the demo-to-production gap with massive scalability and enterprise-grade features.

A database that supports vector search can store data as “vector embeddings,” a key to delivering generative AI applications like those built on GPT-4. With availability on Microsoft Azure, Amazon Web Services (AWS), and Google Cloud, businesses can now use Astra DB as a vector database to power their AI initiatives on any cloud, with best-in-class performance that leverages the speed and limitless scale of Cassandra.

Here’s a quick walkthrough of some of the most important advantages of vector search in Astra DB.

Reducing AI hallucinations

Large language models (LLMs) often struggle to recognize the boundaries of their own knowledge, a shortfall that can lead them to “hallucinate” to fill in the gaps. In many use cases, like customer service chat agents where the customer might already be unhappy, hallucinations can damage a business’s reputation and revenue. Retrieval Augmented Generation (RAG), which is included in this vector search release, can eliminate most hallucinations by grounding the answers in enterprise data. (Check out our new whitepaper, “Vector Search for Generative AI Apps,” for a deep dive.)
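To make the grounding idea concrete, here is a minimal sketch of the retrieval step in RAG. It is not Astra DB code: the in-memory `DOCUMENTS` list, the three-dimensional embeddings, and the helper names are all hypothetical stand-ins for a real vector database and embedding model.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def retrieve(query_vec, documents, k=2):
    """Return the k documents whose embeddings are most similar to the query."""
    ranked = sorted(
        documents,
        key=lambda d: cosine_similarity(query_vec, d["embedding"]),
        reverse=True,
    )
    return ranked[:k]

def build_grounded_prompt(question, passages):
    """Build a prompt that restricts the LLM to the retrieved passages."""
    context = "\n".join(f"- {p['text']}" for p in passages)
    return (
        "Answer using only the context below. "
        "If the context does not contain the answer, say you do not know.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )

# Hypothetical enterprise snippets with made-up embeddings (assumption:
# a real application would compute these with an embedding model).
DOCUMENTS = [
    {"text": "Refunds are issued within 5 business days.", "embedding": [0.9, 0.1, 0.0]},
    {"text": "Support is available 24/7 via chat.", "embedding": [0.1, 0.9, 0.0]},
    {"text": "Premium plans include priority routing.", "embedding": [0.0, 0.2, 0.9]},
]

question = "How long do refunds take?"
query_vec = [0.8, 0.2, 0.1]  # hypothetical embedding of the question
passages = retrieve(query_vec, DOCUMENTS, k=2)
prompt = build_grounded_prompt(question, passages)
print(prompt)
```

The prompt that reaches the LLM contains only the passages closest to the question, which is what ties the answer to enterprise data rather than to the model's guesses.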

Ensuring data security

Many AI use cases involve personally identifiable information (PII), payment information, and medical data. As part of the vector search GA announcement, we’re proud to announce Astra DB is PCI, SOC2, and HIPAA enabled. Skypoint Cloud Inc., which offers a data management platform for the senior living healthcare industry, aims to implement Astra DB as a vector database to ensure seamless access to resident health data and administrative insights.

“Envision it as a ChatGPT equivalent for senior living enterprise data, maintaining full HIPAA compliance, and significantly improving healthcare for the elderly,” said Skypoint CEO Tisson Mathew.

Low latency and high throughput

In real-world production workloads for vector search, a database has to support high-throughput reads and writes for reasons that aren’t necessarily present in other predictive analytics use cases:

- Enterprise data, whether it’s a doctor’s medical notes or airline flight booking information, is updated constantly. This means low-latency reads and writes are critical for the database.

- Chat history needs to be persisted and retrieved in real time; vector stores are often used to accomplish this. In the RAG model, each query to the vector database for the user’s chat history results in one LLM call, which is persisted back into the chat history. This means enterprises must plan for a near 1:1 ratio between vector store queries and writes, which requires both low latency and high throughput for reads and writes alike. In terms of latency targets, both serving vector search results and writing data to a vector store in the low tens of milliseconds are required to meet customers’ needs.

- Advanced RAG techniques impose more stringent latency requirements; Chain-of-Thought, ReAct, and FLARE require multiple queries to both LLMs and the vector database. Each LLM invocation also needs to be stored in memory to be used in subsequent LLM and prompt construction calls. This increases the need for fast reads and writes to a vector store.

Astra DB satisfies these stringent latency and throughput requirements through its architecture, which is designed so that queries and writes perform at roughly the same latency. Under realistic read and write conditions, our tests showed one to two orders of magnitude better throughput and lower latency than other vector search databases (we expect to publish these performance test results soon).
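The near 1:1 ratio of reads to writes described in the list above can be illustrated with a small sketch. Everything here is hypothetical: `CountingVectorStore` is an in-memory stand-in rather than a real client, and `toy_embed` and `toy_llm` are placeholders for an embedding model and an LLM. The `steps` parameter loosely mimics multi-step techniques, where each step reads from and writes to the store.

```python
class CountingVectorStore:
    """Hypothetical in-memory stand-in for a vector store; counts operations."""

    def __init__(self):
        self.rows = []  # list of (embedding, text) pairs
        self.reads = 0
        self.writes = 0

    def search(self, query_vec, k=3):
        """Return the k stored texts with the highest dot-product score."""
        self.reads += 1

        def score(row):
            return sum(q * v for q, v in zip(query_vec, row[0]))

        return [text for _, text in sorted(self.rows, key=score, reverse=True)[:k]]

    def insert(self, vec, text):
        """Store one (embedding, text) pair."""
        self.writes += 1
        self.rows.append((vec, text))


def toy_embed(text):
    """Toy embedding (length, spaces, vowels); a real app would call a model."""
    lowered = text.lower()
    vowels = sum(c in "aeiou" for c in lowered)
    return [float(len(text)), float(text.count(" ")), float(vowels)]


def toy_llm(prompt):
    """Placeholder for an LLM call; echoes the last line of the prompt."""
    return "reply to: " + prompt.splitlines()[-1]


def chat_turn(store, user_message, steps=1):
    """Run one chat turn; each step does one read, one LLM call, one write."""
    reply = ""
    for _ in range(steps):
        history = store.search(toy_embed(user_message))  # one read
        prompt = "\n".join(history + [user_message])
        reply = toy_llm(prompt)  # one LLM call
        store.insert(toy_embed(reply), f"{user_message} -> {reply}")  # one write
    return reply


store = CountingVectorStore()
for message in ["Where is my order?", "Can I change the address?", "Thanks"]:
    chat_turn(store, message)
chat_turn(store, "Compare my last two orders", steps=3)  # multi-step turn
print(store.reads, store.writes)
```

Three single-step turns plus one three-step turn produce equal read and write counts, so in this sketch write latency matters as much as query latency for the end-to-end response time.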

Autoscaling

Astra DB supports autoscaling, ensuring that an AI application’s low-latency requirements for reads and writes are maintained even with increased workloads.

“We have very tight SLAs for our chatbot and our algorithms require multiple round trip calls between the large language model and vector database. Initially, we were unable to meet our SLAs with our other vector stores, but then found we were able to meet our latency requirements using Astra DB,” said Skypoint’s Mathew.

Multi-cloud, on-prem or open source

Most companies that want to deploy at scale run multi-cloud systems to reduce costs, leverage the technical advantages of each cloud provider, and use their own data centers. DataStax is happy to make vector search generally available across GCP, Azure, and AWS to enable companies to deploy generative AI into all their applications. DataStax provides the same level of enterprise support for vector search across all the major clouds.

Because of the infrastructure costs associated with running generative AI in the cloud, and the lack of availability of graphics processing units (GPUs) in the cloud, some companies are buying fleets of GPUs to deploy within their own data centers. For these customers, who want to self-manage their own Cassandra clusters, DataStax plans to soon release a developer preview of vector search on DataStax Enterprise (DSE). Vector search is also an accepted Cassandra Enhancement Proposal (CEP-30) to be included in the upcoming open source Cassandra v5.

Data integrations

Vector search is only as good as the data it queries. There are already dozens of vector search integrations available for Cassandra. With the vector search GA, we are releasing an Azure PowerQuery integration and a GCP BigQuery integration. These integrations are bi-directional: information sent from data lakes such as Azure Fabric and BigQuery to Astra DB can be used as additional context to improve the responses of LLMs, and chat data sent from Astra DB to a data warehouse enables developers to build monitoring systems and business intelligence reports on their AI agents.

A growing ecosystem of development help

Business units looking to deploy the newest AI solutions are often held hostage to a lack of data scientists. Generative AI is no exception, but today, developer tools like LangChain have taken the generative AI community by storm. Because of LangChain’s simple APIs and wide range of integrations with different LLMs and data sources, what would have taken months to build now takes a few days.

CassIO, a library purpose-built to create data abstractions on top of Cassandra, is now officially integrated into LangChain. Advanced features such as Maximal Marginal Relevance, which is supported by only a handful of vector search databases, drastically improve vector search relevance. Features such as Cassandra prompt templating make it easy to construct sophisticated prompts to improve LLM answer relevance. VectorStore Memory makes it easy to integrate chat history that spans the entire lifetime of a customer interacting with an LLM. These advanced features are now available via a single line of configuration.
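For readers unfamiliar with Maximal Marginal Relevance (MMR), the sketch below shows the general idea in plain Python. It is not the CassIO or LangChain implementation; the function names, the `lambda_mult` parameter name, and the two-dimensional vectors are assumptions chosen for illustration. MMR picks results one at a time, trading relevance to the query against similarity to results already chosen.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def mmr(query_vec, candidates, k=2, lambda_mult=0.5):
    """Return indices of k candidates chosen by Maximal Marginal Relevance.

    lambda_mult=1.0 ranks purely by relevance; lower values favor diversity.
    """
    selected = []
    remaining = list(range(len(candidates)))
    while remaining and len(selected) < k:
        def score(i):
            relevance = cosine(query_vec, candidates[i])
            # Penalize similarity to the closest already-selected result.
            redundancy = max(
                (cosine(candidates[i], candidates[j]) for j in selected),
                default=0.0,
            )
            return lambda_mult * relevance - (1 - lambda_mult) * redundancy
        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return selected

# Hypothetical embeddings: candidates 0 and 1 are near-duplicates.
query = [1.0, 1.0]
candidates = [[1.0, 0.2], [1.0, 0.25], [0.1, 1.0]]

plain_top2 = sorted(
    range(len(candidates)),
    key=lambda i: cosine(query, candidates[i]),
    reverse=True,
)[:2]
mmr_top2 = mmr(query, candidates, k=2, lambda_mult=0.5)
print(plain_top2, mmr_top2)
```

In this toy data, plain similarity ranking returns the two near-duplicates, while MMR keeps the most relevant one and replaces the other with a less redundant result.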

Bridge your demo-to-production gap

Getting your generative AI application to production is hard, and we are confident that Astra DB with vector search can help. Sign up here.