Build LLM-Powered Applications Faster with LangChain Templates and Astra DB

Today, we’re excited to be a launch partner for LangChain Templates, a collection of easily deployable reference architectures that anyone can use. With DataStax Astra DB and LangChain Templates, it’s easier than ever to build and deploy generative AI applications.

LangChain Templates simplify deploying applications built on large language models (LLMs) as RESTful web services. This lets us, as developers, quickly test how our application interacts with its model.

We have already seen that LangChain makes building an LLM-powered application drastically easier by abstracting away many of the more complicated aspects of working with LLMs. The individual components needed for a specific use case are assembled into structures called “chains,” which are essentially a sequence of steps for our application and LLM to perform.
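To make the idea of a chain concrete, here is a minimal pure-Python sketch of the concept: a chain is modeled as an ordered list of steps, each a function whose output becomes the next step's input. This is not LangChain's API (LangChain composes real components, typically with the `|` operator); the `Chain` class and the step functions below are hypothetical stand-ins, and the "LLM" step is a fake that only echoes its prompt.

```python
from typing import Any, Callable, List


class Chain:
    """Hypothetical minimal chain: runs each step in order, feeding outputs forward."""

    def __init__(self, steps: List[Callable[[Any], Any]]) -> None:
        self.steps = steps

    def invoke(self, value: Any) -> Any:
        # Pass the output of each step as the input of the next one.
        for step in self.steps:
            value = step(value)
        return value


def build_prompt(question: str) -> str:
    # Step 1: wrap the user's question in a prompt template.
    return f"Answer concisely: {question}"


def fake_llm(prompt: str) -> dict:
    # Step 2: stand-in for a model call; a real chain would call an LLM here.
    return {"text": f"[model reply to: {prompt}]"}


def parse_output(response: dict) -> str:
    # Step 3: extract plain text from the fake model's response.
    return response["text"]


chain = Chain([build_prompt, fake_llm, parse_output])

if __name__ == "__main__":
    # prints: [model reply to: Answer concisely: What do ants eat?]
    print(chain.invoke("What do ants eat?"))
```

In LangChain itself, the equivalent steps would typically be a prompt template, a chat model, and an output parser piped together.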

This process has become even easier with LangChain Templates, which let us start from pre-built chains for popular LLM use cases. With a LangChain Template, we can quickly get a simple application up and running and use it to explore what our LLM application can do.

Note: A list of available templates can be found in this GitHub repository.

Another advantage of using LangChain Templates is that LangChain offers an integration with Astra DB as a vector store. This greatly simplifies the process of generating and storing the vector embeddings that drive our application.
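As a rough illustration of what generating and storing vector embeddings involves, the sketch below uses a toy in-memory store. Each text is turned into a vector by a hypothetical `embed` function (a simple letter-count vector standing in for a real embedding model), and queries are answered by cosine similarity. The method names mirror LangChain's vector store interface (`add_texts`, `similarity_search`), but this class is not part of LangChain and does not talk to Astra DB; it only shows the store-then-search pattern that a vector store handles for our application.

```python
import math
from typing import List, Tuple


def embed(text: str) -> List[float]:
    """Hypothetical toy embedding: counts of each letter a-z (not a real model)."""
    counts = [0.0] * 26
    for ch in text.lower():
        if "a" <= ch <= "z":
            counts[ord(ch) - ord("a")] += 1.0
    return counts


def cosine(a: List[float], b: List[float]) -> float:
    # Cosine similarity; returns 0.0 if either vector is all zeros.
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """In-memory stand-in for a vector store: keeps (text, vector) pairs."""

    def __init__(self) -> None:
        self.rows: List[Tuple[str, List[float]]] = []

    def add_texts(self, texts: List[str]) -> None:
        # Generate and store an embedding for every text.
        for text in texts:
            self.rows.append((text, embed(text)))

    def similarity_search(self, query: str, k: int = 1) -> List[str]:
        # Rank stored texts by cosine similarity to the query's embedding.
        q = embed(query)
        ranked = sorted(self.rows, key=lambda row: cosine(q, row[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


if __name__ == "__main__":
    store = ToyVectorStore()
    store.add_texts(["bees bees bees", "zzz"])
    print(store.similarity_search("bee"))
```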

So why should we use Astra DB for vector search?

Have you ever added new data to a vector database and then waited several seconds (sometimes even minutes) for it to finish indexing before you could query it? Astra DB makes all data, including vectors, available immediately, with zero delay.

This is because Astra DB is a Database-as-a-Service (DBaaS) built on Apache Cassandra®. Cassandra lets Astra DB update indexes concurrently while serving data at petabyte scale, and replicate data across wide geographic areas for extremely high availability. When scale and performance matter, DataStax Astra DB is the vector database of choice.

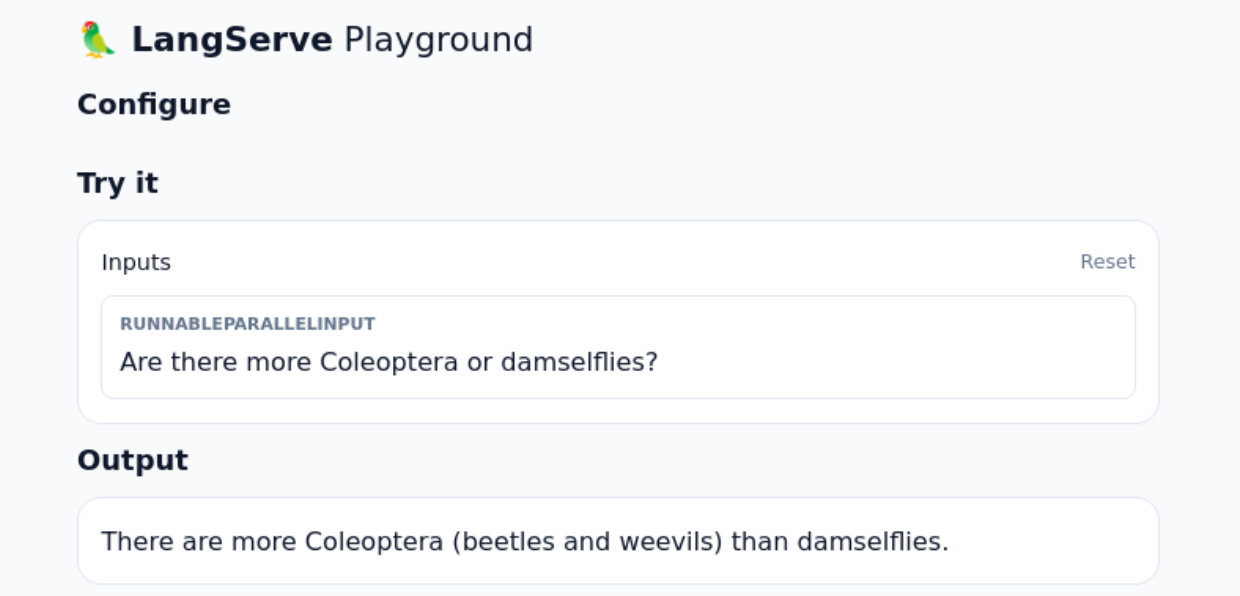

Example: Cassandra Entomology RAG

For those of you who wish to try this out, here’s a quick Python example using the cassandra-entomology-rag template. It builds a sample chatbot that answers questions using a knowledge context on entomology, the study of insects.
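Before running the template, it may help to see the retrieval-augmented generation (RAG) pattern in miniature. The sketch below assumes the retrieval step has already returned some relevant passages; the passages and the `build_rag_prompt` helper are hypothetical and not taken from the template. It shows how retrieved passages are placed into the prompt alongside the user's question, so the LLM answers from that knowledge context.

```python
from typing import List


def build_rag_prompt(question: str, passages: List[str]) -> str:
    """Hypothetical helper: combine retrieved passages and a question into one prompt."""
    # Number each passage so the model (and a reader) can tell them apart.
    context = "\n".join(f"{i}. {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    # Illustrative passages, standing in for what a vector search might return.
    retrieved = [
        "Insects have six legs.",
        "Beetles make up the largest order of insects.",
    ]
    print(build_rag_prompt("How many legs does an insect have?", retrieved))
```

In the real template, the passages would come from a vector search against Astra DB and the resulting prompt would be sent to the OpenAI model.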

Before proceeding with this example, you should have the following:

- An OpenAI account and API key.

- An Astra DB account, vector database, and token.

- LangChain CLI installed.

To install the LangChain CLI, execute this command:

```shell
pip install -U "langchain-cli[serve]"
```

Begin by creating a new application in your working directory:

```shell
langchain app new cassandraEntomologyRAG --package cassandra-entomology-rag
```

You will be asked whether you want to:

- Install the `cassandra-entomology-rag` package.
- Generate route code for the package.

Say “Yes” to both of those prompts. The latter prompt will produce two lines of code:

```python
from cassandra_entomology_rag import chain as cassandra_entomology_rag_chain

add_routes(app, cassandra_entomology_rag_chain, path="/cassandra-entomology-rag")
```

From within the `cassandraEntomologyRAG/` directory, edit the `app/server.py` file and add the `from cassandra_entomology_rag` import line as the last import. Then replace the default `add_routes(app, NotImplemented)` line already present in the file with the generated `add_routes(app, cassandra_entomology_rag_chain, ...)` line. When you are finished, `server.py` should look like this:

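```python
from fastapi import FastAPI
from langserve import add_routes

# Import the template's chain (added as the last import)
from cassandra_entomology_rag import chain as cassandra_entomology_rag_chain

app = FastAPI()

# Serve the chain at /cassandra-entomology-rag (replaces add_routes(app, NotImplemented))
add_routes(app, cassandra_entomology_rag_chain, path="/cassandra-entomology-rag")

# Entry point when the file is run directly as a script
if __name__ == "__main__":
    import uvicorn

    uvicorn.run(app, host="0.0.0.0", port=8000)
```

Make sure you set the necessary environment variables; for example: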

```shell
export OPENAI_API_KEY="xy-..."
export ASTRA_DB_ID="01234567-..."
export ASTRA_DB_APPLICATION_TOKEN="AstraCS:xY......"
export ASTRA_DB_KEYSPACE="default_keyspace"
```
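If the server fails to start or cannot reach OpenAI or Astra DB, a missing variable can be the cause. The following optional Python sketch checks that the four variables from the example above are set and non-empty before you run `langchain serve`; the `missing_env_vars` helper is hypothetical and not part of the template.

```python
import os
import sys
from typing import Iterable, List, Mapping

# Variable names taken from the export commands in this example.
REQUIRED_VARS = (
    "OPENAI_API_KEY",
    "ASTRA_DB_ID",
    "ASTRA_DB_APPLICATION_TOKEN",
    "ASTRA_DB_KEYSPACE",
)


def missing_env_vars(
    names: Iterable[str] = REQUIRED_VARS, env: Mapping[str, str] = os.environ
) -> List[str]:
    """Return the names that are unset or empty (whitespace only) in `env`."""
    return [name for name in names if not env.get(name, "").strip()]


if __name__ == "__main__":
    missing = missing_env_vars()
    if missing:
        print("Missing environment variables: " + ", ".join(missing))
        sys.exit(1)
    print("All required environment variables are set.")
```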

Start the application from within the cassandraEntomologyRAG/ directory:

```shell
langchain serve
```

With the server running, open a new browser window at the following URL:

http://127.0.0.1:8000/cassandra-entomology-rag/playground/
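Because the template is served as a RESTful web service, you can also call it from code instead of through the playground. LangServe ships a `RemoteRunnable` client for this; the standard-library sketch below avoids extra dependencies. It assumes LangServe's `/invoke` endpoint under the route path set in `server.py`, which accepts a JSON body with an `"input"` field and returns a JSON object with an `"output"` field, and it assumes the entomology chain takes a plain question string as input. The helper names are illustrative; if the schema differs, check the server's `/docs` page.

```python
import json
import urllib.request

# Assumed base URL: the host, port, and route path configured in server.py.
BASE_URL = "http://127.0.0.1:8000/cassandra-entomology-rag"


def build_invoke_request(question: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) a POST request for the route's /invoke endpoint."""
    body = json.dumps({"input": question}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/invoke",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(question: str) -> str:
    """Send the question to the running server and return the chain's output."""
    with urllib.request.urlopen(build_invoke_request(question), timeout=60) as resp:
        return json.loads(resp.read().decode("utf-8"))["output"]


if __name__ == "__main__":
    # Requires `langchain serve` to be running in another terminal.
    print(ask("Which insects pollinate flowers?"))
```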

In summary, LangChain Templates offer a collection of LLM reference architectures that are easy to use and can be deployed quickly. If you’re building an LLM-powered application, combining a LangChain Template with Astra DB’s LangChain integration can supercharge your development process.

For more information, have a look at LangChain’s Quick Start for LangChain Templates. Also, be sure to check out Astra DB and register for free to get started with the most production-ready vector database on the market.