How Fog Computing Powers AI, IoT, and 5G

It wasn’t enough to have “the cloud,” but now we have fog?

What’s next—hail?

The term may sound a little odd and conjure up images of distant lighthouses in faraway lands, but “fog computing”—a term created by Cisco in 2012—is actually a “thing” and is becoming more and more of a thing every day as the Internet of Things (IoT) grows.

451 Research predicts that the fog computing market could reach more than $18 billion worldwide by 2022, and Statista predicts that the number of connected devices worldwide will grow to over 75 billion by 2025.

That’s a huge market, so obviously we are talking about something important.

But first—let’s make sure we’re clear on what “fog computing” is.

What is fog computing?

Also referred to as “fogging”, fog computing essentially means extending computing to the edge of an enterprise’s network rather than hosting and working from a centralized cloud. This facilitates the local processing of data in smart devices and smooths the operation of compute, storage, and networking services between end devices and cloud computing data centers.

Fog computing supports IoT, 5G, artificial intelligence (AI), and other applications that need ultra-low latency, high network bandwidth, or added security, or that must operate under tight resource constraints.

Are fog computing and edge computing the same thing?

No. Fog computing always uses edge computing, but not the other way around. Fog is a system-level architecture, providing tools for distributing, orchestrating, managing, and securing resources and services across networks and between devices that reside at the edge.

Edge computing architectures place servers, applications, or small clouds at the edge. Fog computing has both a hierarchical and a flat architecture, with several layers forming a network, while edge computing relies on separate nodes that do not form a network.

Fog computing also has extensive peer-to-peer interconnect capability between nodes, whereas edge computing runs its nodes in silos, so peer-to-peer traffic must travel back through the cloud. Finally, fog computing includes the cloud, while edge computing excludes it.

Why is fog computing needed?

The major cloud platforms rarely experience downtime, but it still happens every now and again; and when it does, it spells big problems and big losses.

In May 2018, for example, AWS was knocked offline for 30 minutes. That might not seem like a lot of time. But let’s say you’re a large enterprise with 50,000 employees. If none of them are able to access the data they need to do their jobs for 30 minutes, that’s 25,000 hours—or 3,125 eight-hour work days—the enterprise loses.

Similarly, in November 2018, Microsoft Azure was down—not just once, but twice—due to multi-factor authentication issues affecting a variety of customers’ applications.

Latency, security and privacy, and unreliability are all genuine concerns as the number of connected devices grows and puts overwhelming stress on the big cloud providers’ networks. Real-time processing, a priority for IoT, also becomes very difficult. These difficulties have led the Internet of Things to require a platform beyond the centralized cloud to function optimally: an infrastructure that can handle IoT transactions without unnecessary risk.

Fog computing has emerged as the solution.

How does fog computing work?

Depending on your background, fog computing is probably not as complicated as it may sound.

Developers either port or write IoT applications for fog nodes at the network edge. The fog nodes closest to the network edge ingest the data from IoT devices. Then—and this is crucial—the fog IoT application directs different types of data to the optimal place for analysis.

- In most cases, time-sensitive data is analyzed on the fog node closest to the things generating the data.

- Data that can wait seconds or minutes for action is passed to a centralized aggregation cluster or node for analysis, and then an action is performed.

- Less time-sensitive data is sent to the cloud for historical analysis and storage. For example, each fog node might send periodic summaries of its data to the cloud for historical or big data analysis.
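To make the three-way split above concrete, here is a minimal Python sketch of how a fog IoT application might choose where each reading is analyzed based on how long it can wait. The `Tier`, `Reading`, and `route` names and both thresholds (under one second for the nearest fog node, up to ten minutes for the aggregation node) are hypothetical assumptions for illustration, not values from any fog platform.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    NEAREST_FOG_NODE = "nearest fog node"
    AGGREGATION_NODE = "aggregation node"
    CLOUD = "cloud"


# Hypothetical thresholds; a real deployment would tune these per application.
FOG_NODE_MAX_DELAY_S = 1.0         # must be acted on in under a second
AGGREGATION_MAX_DELAY_S = 10 * 60  # can wait seconds to minutes


@dataclass
class Reading:
    sensor_id: str
    value: float
    max_delay_s: float  # how long this reading can wait before it is analyzed


def route(reading: Reading) -> Tier:
    """Pick the analysis tier for a reading from its latency tolerance."""
    if reading.max_delay_s < FOG_NODE_MAX_DELAY_S:
        return Tier.NEAREST_FOG_NODE
    if reading.max_delay_s <= AGGREGATION_MAX_DELAY_S:
        return Tier.AGGREGATION_NODE
    return Tier.CLOUD  # historical / big data analysis


for r in [
    Reading("pressure-valve-7", 212.0, max_delay_s=0.05),
    Reading("line-throughput", 840.0, max_delay_s=30),
    Reading("daily-energy-use", 13_000.0, max_delay_s=24 * 3600),
]:
    print(r.sensor_id, "->", route(r).value)
# pressure-valve-7 -> nearest fog node
# line-throughput -> aggregation node
# daily-energy-use -> cloud
```

Keying the decision on a per-reading latency budget, rather than on sensor type, keeps the policy in one place; in practice the budget would come from the application's requirements.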

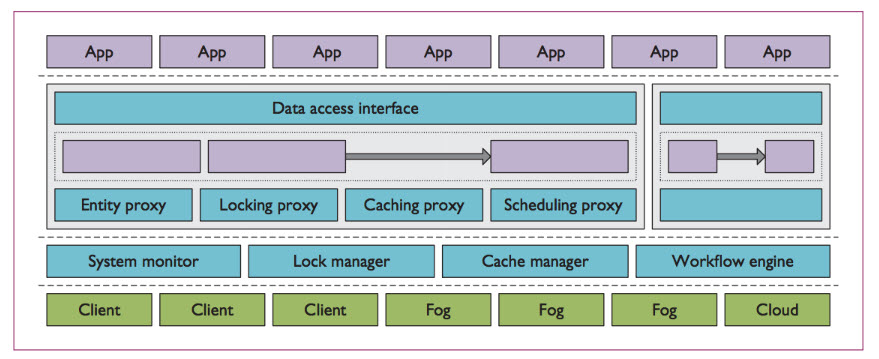

Figure 1. WM-FOG software stack. The top layer is the application layer, where user applications reside. The next layer is the workflow layer, where workflow instances reside. Under the workflow layer is the system layer, where the system components reside. The bottom layer is the entity layer, where the system entities (client devices, fog nodes, and the cloud) reside. Reference: http://www.cs.wm.edu


How does the fog interact with the cloud?

The fog and cloud do a dance that allows each to perform optimally for the IoT use case. The main difference between fog computing and cloud computing is that cloud is a centralized system, while fog is a distributed decentralized infrastructure.

Fog nodes:

- Receive feeds from IoT devices using any protocol, in real time.

- Run IoT-enabled applications for real-time control and analytics, with millisecond response time.

- Provide transient storage, often 1–2 hours.

- Send periodic data summaries to the cloud.

The cloud platform:

- Receives and aggregates data summaries from many fog nodes.

- Performs analysis on the IoT data and data from other sources to gain business insight.

- Can send new application rules to the fog nodes based on these insights.
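The division of labor above can be sketched in a few lines of Python. This is an illustrative model only: the `FogNode` class, its two-hour retention window, and the single `alert_threshold` rule are hypothetical assumptions. The node acts on each reading locally, keeps only a short transient buffer, produces a compact summary for the cloud, and accepts a new rule pushed back down.

```python
import statistics
import time
from collections import deque
from typing import Optional


class FogNode:
    """Hypothetical fog node: acts on readings locally, keeps a short transient
    buffer, and builds periodic summaries for the cloud."""

    def __init__(self, retention_s: float = 2 * 3600, alert_threshold: float = 100.0):
        self.retention_s = retention_s          # transient storage window (2 hours here)
        self.alert_threshold = alert_threshold  # local rule the cloud may update
        self.buffer = deque()                   # (timestamp, value) pairs, oldest first

    def ingest(self, value: float, now: Optional[float] = None) -> bool:
        """Buffer a reading; return True if it should trigger a local action."""
        now = time.time() if now is None else now
        self.buffer.append((now, value))
        # Evict readings that have aged out of the transient storage window.
        while now - self.buffer[0][0] > self.retention_s:
            self.buffer.popleft()
        return value > self.alert_threshold  # real-time decision made at the edge

    def summary(self) -> dict:
        """Compact summary sent to the cloud in place of every raw reading."""
        values = [v for _, v in self.buffer]
        if not values:
            return {"count": 0}
        return {"count": len(values), "min": min(values), "max": max(values),
                "mean": statistics.fmean(values)}

    def apply_rule(self, rule: dict) -> None:
        """Apply an updated rule pushed down from the cloud."""
        self.alert_threshold = rule.get("alert_threshold", self.alert_threshold)


node = FogNode()
print([node.ingest(v, now=float(t)) for t, v in enumerate([80.0, 95.0, 120.0])])
# [False, False, True]
node.apply_rule({"alert_threshold": 90.0})  # e.g. a rule derived from cloud analysis
print(node.ingest(95.0, now=3.0))  # True
print(node.summary())
# {'count': 4, 'min': 80.0, 'max': 120.0, 'mean': 97.5}
```

Sending a four-field summary instead of every raw reading is also where the bandwidth savings discussed later come from.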

When do I need to consider fog computing?

As already stated, fog computing has become an essential aspect of IoT, but it’s not necessarily a requirement for all enterprises.

Here’s a short checklist to help you determine whether your business needs it.

If you have:

- Data collected at the extreme edge: vehicles, ships, factory floors, roadways, railways, etc.;

- Thousands or millions of things generating data across a large geographic area;

- A requirement to analyze and act on this data in less than a second;

Then you probably need fog computing.
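The checklist can be encoded as a simple predicate. This Python sketch reads the list as requiring all three conditions together, and the `Workload` fields and the 1,000-device cutoff standing in for "thousands or millions of things" are hypothetical assumptions; adjust both to your own reading of the criteria.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    data_at_extreme_edge: bool      # vehicles, ships, factory floors, roadways, railways...
    device_count: int               # number of things generating data
    geographically_dispersed: bool  # spread across a large geographic area
    required_response_s: float      # how quickly the data must be analyzed and acted on


def probably_needs_fog(w: Workload, min_devices: int = 1_000) -> bool:
    """Checklist above, read as: all three conditions must hold."""
    many_dispersed_things = w.device_count >= min_devices and w.geographically_dispersed
    sub_second = w.required_response_s < 1.0
    return w.data_at_extreme_edge and many_dispersed_things and sub_second


rail_network = Workload(True, 250_000, True, required_response_s=0.2)
office_sensors = Workload(False, 300, False, required_response_s=5.0)
print(probably_needs_fog(rail_network), probably_needs_fog(office_sensors))  # True False
```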

The benefits of fog computing

Extending the cloud closer to the things that generate and act on data benefits the business in the following ways:

- Greater business agility: With the right tools, developers can quickly develop fog applications and deploy them where needed. Machine manufacturers can offer Machine-as-a-Service to their customers. Fog applications program the machine to operate in the way each customer needs.

- Better security: Protect your fog nodes using the same policy, controls, and procedures you use in other parts of your IT environment. Use the same physical security and cybersecurity solutions.

- Deeper insights, with privacy control: Analyze sensitive data locally instead of sending it to the cloud for analysis. Your IT team can monitor and control the devices that collect, analyze, and store data.

- Lower operating expenses: Conserve network bandwidth by processing selected data locally instead of sending it to the cloud for analysis.

Reference: Advancing Consumer-Centric Fog Computing Architectures

How does DataStax help?

DataStax Enterprise Advanced Replication takes a very straightforward approach to solving these challenges. It allows many edge clusters—each acting independently and sized, configured, and deployed appropriately for each edge location—to send data to a central hub cluster.

Data flowing from an edge cluster can be prioritized to ensure the most important data is sent before the less important data. Inconsistent data connections are fully accommodated at the edge with a “store and forward” approach that will save mutations until connectivity is restored. Any type of workload is supported at both the edge and the hub, allowing for advanced search and analytics use cases at remote and central locations.
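The prioritized "store and forward" behavior described above can be illustrated with a small Python sketch. To be clear, this is not DSE Advanced Replication's API or implementation; the `StoreAndForwardQueue` class, its integer priorities (lower means more important), and the `send` callable are hypothetical. Mutations are held in a priority queue while the link to the hub is down and drained most-important-first once it returns, and a failed send leaves the mutation stored for the next attempt.

```python
import heapq
import itertools
from typing import Any, Callable


class StoreAndForwardQueue:
    """Hypothetical edge-side outbox illustrating prioritized store and forward."""

    def __init__(self, send: Callable[[Any], None]):
        self._send = send              # ships one mutation to the hub; may raise ConnectionError
        self._heap = []                # entries: (priority, sequence, mutation)
        self._seq = itertools.count()  # keeps arrival order within a priority level

    def enqueue(self, mutation: Any, priority: int) -> None:
        """Store a mutation; a lower priority number means it is sent sooner."""
        heapq.heappush(self._heap, (priority, next(self._seq), mutation))

    def flush(self, link_up: bool) -> int:
        """Send stored mutations in priority order while the link is up.
        Returns how many were sent; a failed send stays queued for retry."""
        sent = 0
        while link_up and self._heap:
            _, _, mutation = self._heap[0]
            try:
                self._send(mutation)
            except ConnectionError:
                break  # connectivity dropped mid-flush: keep it stored
            heapq.heappop(self._heap)
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._heap)


delivered = []
outbox = StoreAndForwardQueue(send=delivered.append)
outbox.enqueue({"sensor": "temp-3", "value": 21.4}, priority=5)
outbox.enqueue({"alarm": "pump-2 overheating"}, priority=0)
print(outbox.flush(link_up=False))  # 0: link down, both mutations stay stored
print(outbox.flush(link_up=True))   # 2: link restored, alarm sent first
print(delivered)
# [{'alarm': 'pump-2 overheating'}, {'sensor': 'temp-3', 'value': 21.4}]
```

The sequence counter breaks ties between equal priorities, so mutations are never compared directly and same-priority data keeps its arrival order.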

With DSE Advanced Replication, clusters at the edge of the enterprise can replicate data to a central hub to enable both regional and global views of enterprise data. DSE Advanced Replication was designed from the ground up with operational challenges such as limited or intermittent connectivity in mind, so that it tolerates real-world failure modes.

Read more about DataStax Advanced Replication and how it provides complete data autonomy.