Geospatial search and Spark in DataStax Enterprise

In this post I will discuss using geospatial search in DataStax Enterprise (DSE) Search and with Apache Spark™ as part of DSE Analytics. I will also provide a demo project that you can download and try.

Note: This blog post was written targeting DSE 4.8. Please refer to the DataStax documentation for your specific version of DSE if different.

No ETL

Most search tools, such as Apache Solr™ or Elasticsearch, have a geospatial search feature that lets users ask questions like 'give me all locations within 1 km of the given coordinates'. We see this requirement more and more often, especially in mobile applications. In most cases it means you have to ETL (extract, transform and load) the data from the main database into a dedicated search tool. That approach has a lot of disadvantages, chief among them that the two data sources now have to be kept in sync. DSE lets you keep one version of your data and use it for both realtime access and specialized search queries.

DSE SearchAnalytics

DSE allows you to create a node with three complementary features:

- An Apache Cassandra™ node for storing realtime transactional data

- A Solr web application for all search and geospatial queries on the realtime data in Cassandra

- A Spark Worker to allow for analytics queries based on both the realtime data in Cassandra and the indexes provided by Solr.

One set of data is used in many ways to provide multiple features. I have personally been part of projects where the main dataset was held in a relational database and ETL'ed both to a specialized search tool and to an Apache Hadoop™ cluster. Well, that time has passed.

Example

The following example is available on my GitHub page. It shows how to load every postcode in the UK together with the latitude and longitude of its location.

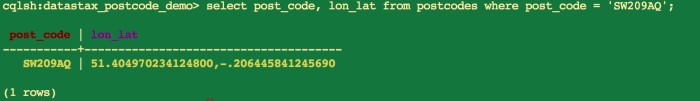

We can see our data by running 'select post_code, lon_lat from postcodes;'

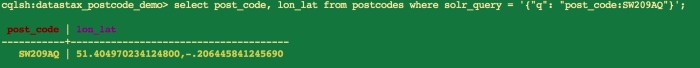

We can also query our data using a Solr query like this:

select post_code, lon_lat from postcodes where solr_query = '{"q": "post_code:SW209AQ"}';

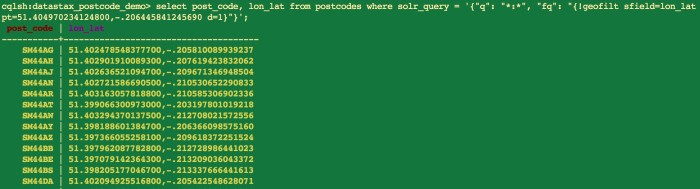

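Now we can move on to geospatial queries and ask questions like "show me all postcodes within 1 km of 'SW20 9AQ'". In the filter below, pt is the latitude and longitude of the centre point and d is the distance in kilometres: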

select * from postcodes where solr_query = '{"q": "*:*", "fq": "{!geofilt sfield=lon_lat pt=51.404970234124800,-.206445841245690 d=1}"}';
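To make the radius filter more concrete, here is a minimal sketch of the idea behind it: compute the great-circle distance from the centre point to each candidate and keep the ones within d kilometres. This is only an illustration using the haversine formula, not Solr's actual implementation; the object name `GeoFilterSketch`, its methods and the Earth radius constant are all assumptions made for this example.

```scala
// Illustrative only: a hand-rolled radius filter, not how Solr implements geofilt.
object GeoFilterSketch {
  // Mean Earth radius in kilometres (a common approximation, assumed here).
  val EarthRadiusKm = 6371.0

  /** Great-circle distance in km between two points given as degrees of lat/lon. */
  def haversineKm(lat1: Double, lon1: Double, lat2: Double, lon2: Double): Double = {
    val dLat = math.toRadians(lat2 - lat1)
    val dLon = math.toRadians(lon2 - lon1)
    val a = math.pow(math.sin(dLat / 2), 2) +
      math.cos(math.toRadians(lat1)) * math.cos(math.toRadians(lat2)) *
        math.pow(math.sin(dLon / 2), 2)
    2 * EarthRadiusKm * math.asin(math.sqrt(a))
  }

  /** Names of the (name, lat, lon) points that lie within radiusKm of the centre. */
  def withinRadius(points: Seq[(String, Double, Double)],
                   centreLat: Double, centreLon: Double,
                   radiusKm: Double): Seq[String] =
    points.collect {
      case (name, lat, lon)
          if haversineKm(centreLat, centreLon, lat, lon) <= radiusKm => name
    }
}
```

In practice you would let the Solr index do this work, since it avoids scanning every row; the sketch is just a way to reason about what a result set should contain.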

I can also connect to my data from Apache Spark using the Spark Cassandra Connector. There are several ways to do this, including the cassandraTable method, the CassandraConnector class and Spark SQL. The following examples show each approach in turn:

Using Cassandra Table

//Get data within a 1km radius

sc.cassandraTable("datastax_postcode_demo", "postcodes").select("post_code").where("solr_query='{\"q\": \"*:*\", \"fq\": \"{!geofilt sfield=lon_lat pt=51.404970234124800,-.206445841245690 d=1}\"}'").collect.foreach(println)

//Get data within a rectangle

sc.cassandraTable("datastax_postcode_demo", "postcodes").select("post_code").where("solr_query='{\"q\": \"*:*\", \"fq\": \"lon_lat:[51.2,-.2064458 TO 51.3,-.2015418]\"}'").collect.foreach(println)
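The escaped query strings above are easy to mistype, so it can help to build them from small functions. The following is a minimal sketch under stated assumptions: `SolrQueryBuilder` and its methods are hypothetical helpers written for this post, not part of DSE or the Spark Cassandra Connector, and they do no escaping of quotes inside the arguments.

```scala
// Hypothetical helpers for assembling the solr_query predicate used in this post.
object SolrQueryBuilder {
  /** Solr geofilt filter: documents within km kilometres of (lat, lon). */
  def geofilt(field: String, lat: Double, lon: Double, km: Double): String =
    s"{!geofilt sfield=$field pt=$lat,$lon d=$km}"

  /** Solr range filter: documents inside the lat/lon rectangle. */
  def rectangle(field: String, minLat: Double, minLon: Double,
                maxLat: Double, maxLon: Double): String =
    s"$field:[$minLat,$minLon TO $maxLat,$maxLon]"

  /** Wraps a filter query in the JSON document passed to solr_query. */
  def solrQueryJson(fq: String, q: String = "*:*"): String =
    s"""{"q": "$q", "fq": "$fq"}"""

  /** CQL predicate suitable for a where(...) call. */
  def whereClause(json: String): String = s"solr_query='$json'"
}
```

With these in scope, the radius example could be written as `sc.cassandraTable("datastax_postcode_demo", "postcodes").select("post_code").where(SolrQueryBuilder.whereClause(SolrQueryBuilder.solrQueryJson(SolrQueryBuilder.geofilt("lon_lat", 51.4049702341248, -0.20644584124569, 1.0))))`.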

Filtering with radius and box bounds

import com.datastax.spark.connector.cql.CassandraConnector

import scala.collection.JavaConversions._

//Get data within a 1km radius

CassandraConnector(sc.getConf).withSessionDo { session => session.execute("select * from datastax_postcode_demo.postcodes where solr_query='{\"q\": \"*:*\", \"fq\": \"{!geofilt sfield=lon_lat pt=51.404970234124800,-.206445841245690 d=1}\"}'")

}.all.foreach(println)

//Get data within a 1km bounding box

CassandraConnector(sc.getConf).withSessionDo { session =>

session.execute("select post_code, lon_lat from datastax_postcode_demo.postcodes where solr_query='{\"q\": \"*:*\", \"fq\": \"{!bbox sfield=lon_lat pt=51.404970234124800,-.206445841245690 d=1}\"}'")

}.all.foreach(println)
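The two filters used here differ in shape: geofilt matches a circle around the point, while bbox matches the square bounding box that encloses that circle, so bbox can return extra points near the corners. The sketch below shows roughly how such a box can be derived from a centre and a distance. It is an approximation written for illustration (it ignores the poles and the antimeridian) and is not Solr's code; `BoundingBoxSketch`, `Box` and `around` are names invented for this example.

```scala
// Illustrative only: approximate bounding box of a circle on the Earth's surface.
object BoundingBoxSketch {
  // Mean Earth radius in kilometres (assumed approximation).
  val EarthRadiusKm = 6371.0

  /** Axis-aligned box in degrees of latitude and longitude. */
  case class Box(minLat: Double, maxLat: Double, minLon: Double, maxLon: Double) {
    def contains(lat: Double, lon: Double): Boolean =
      lat >= minLat && lat <= maxLat && lon >= minLon && lon <= maxLon
  }

  /** Box enclosing a circle of radiusKm around (lat, lon). */
  def around(lat: Double, lon: Double, radiusKm: Double): Box = {
    // Angular size of the radius along a meridian.
    val dLat = math.toDegrees(radiusKm / EarthRadiusKm)
    // Lines of longitude converge away from the equator, so widen by 1 / cos(lat).
    val dLon = math.toDegrees(radiusKm / (EarthRadiusKm * math.cos(math.toRadians(lat))))
    Box(lat - dLat, lat + dLat, lon - dLon, lon + dLon)
  }
}
```

A point close to a corner of the box can be more than 1 km from the centre and still match the bbox filter, which is the trade-off for a cheaper check.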

Spark SQL

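import org.apache.spark.sql.cassandra.CassandraSQLContext

//csc is assumed to be a CassandraSQLContext; if your shell does not already provide one, create it with: val csc = new CassandraSQLContext(sc)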

//Get data within a 1km radius

val rdd = csc.sql("select post_code, lon_lat from datastax_postcode_demo.postcodes where solr_query='{\"q\": \"*:*\", \"fq\": \"{!geofilt sfield=lon_lat pt=51.404970234124800,-.206445841245690 d=1}\"}'")

rdd.collect.foreach(println)

//Get data within a rectangle

val rdd = csc.sql("select post_code, lon_lat from datastax_postcode_demo.postcodes where solr_query='{\"q\": \"*:*\", \"fq\": \"lon_lat:[51.2,-.2064458 TO 51.3,-.2015418]\"}'")

rdd.collect.foreach(println)

Want to learn more?

Visit the DataStax Academy for tutorials, demos and self-paced training courses.