Introducing LangStream, an Open Source Project for Integrating Diverse Data Types in Production-Ready GenAI Applications

Generative AI (Gen AI) applications that build accurate context and avoid hallucinations must be fueled by fresh, real-time data from a variety of sources. But this poses a significant challenge to developers: how can they quickly and confidently build production-ready applications on diverse data types, including vectorized data, non-vector data, and event streams?

We recently announced a key part of solving this problem when we added vector search capabilities to DataStax Astra DB, which is designed for simultaneous search and update on distributed data and streaming workloads with ultra-low latency, as well as highly relevant vector results that eliminate redundancies.

Now, we've taken another important step with the introduction of LangStream, a new open source project that combines the best of event-based architectures with the latest Gen AI technologies. LangStream makes it easy to coordinate data from a variety of sources to enable high-quality prompts for large language models (LLMs)—making it far simpler to build scalable, production-ready, real-world AI applications on a broad range of data types.

(For a more technical dive into LangStream, read this blog post.)

Why LangStream?

If you're in the technology realm, you're well aware that Gen AI is among the most groundbreaking technologies shaping our world, and that it brings a host of new challenges. For one, how can developers quickly turn their Gen AI concepts into real-world applications? And not just any applications, but those that harness the power of event-driven architectures and can provide instantaneous, AI-driven responses to users in ways that seemed like science fiction a year or two ago.

LangStream connects streaming, event-based systems with the ability of Gen AI to produce new content from all kinds of data, both proprietary and public, shortening the time it takes developers to bring AI applications to production. Whether you’re moving from a local setting to a production environment or integrating complex tasks such as sending data to a vector database like Astra DB, it’s all streamlined.

LangStream + Astra DB: Seamlessly combine streaming data with Gen AI

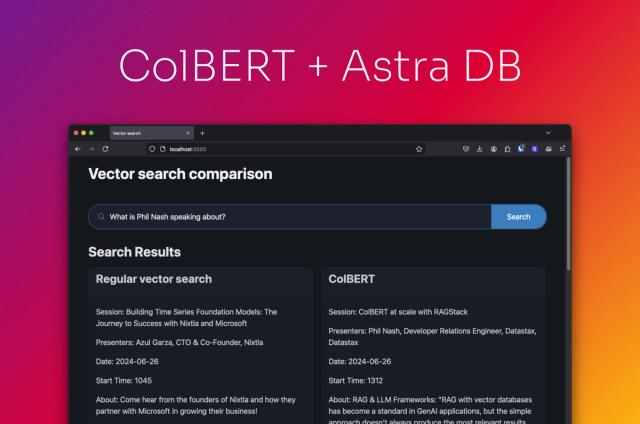

Central to any Gen AI application is the LLM. The efficacy of an LLM depends heavily on the prompt, which essentially is the contextual input that triggers the AI. This context often comes from data already in an organization’s systems, whether in caches, databases, or streaming platforms. That's where LangStream shines and vector databases like Astra DB become essential.

Imagine: You need to carry out a semantic search in a vector database like Astra DB. Before this, your data – no matter where it comes from – needs to be converted into vectors using an embedding model. With LangStream, this complicated process is boiled down to a simple YAML file defining a data pipeline.
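To make the embed-then-search flow concrete, here is a minimal Python sketch of the idea. It is not LangStream's pipeline format or Astra DB's API: `toy_embed` is a hypothetical stand-in for a real embedding model (it just hashes words into buckets), and `InMemoryVectorStore` is a hypothetical stand-in for a vector database. It only illustrates the steps that a pipeline would automate: convert text to vectors, store them, and rank stored items by similarity to a query.

```python
import hashlib
import math

DIM = 256  # toy size (assumption); real embedding models define their own dimensions


def toy_embed(text: str) -> list[float]:
    """Hypothetical stand-in for an embedding model: hashed bag-of-words."""
    vec = [0.0] * DIM
    for token in text.lower().split():
        # A cryptographic hash keeps bucket assignment stable across runs,
        # unlike Python's built-in hash(), which is randomized for strings.
        digest = hashlib.sha256(token.encode("utf-8")).hexdigest()
        vec[int(digest, 16) % DIM] += 1.0
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; inputs are unit length (or all zeros), so a dot product suffices."""
    return sum(x * y for x, y in zip(a, b))


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database."""

    def __init__(self) -> None:
        self._rows: list[tuple[str, list[float]]] = []

    def add(self, text: str) -> None:
        # Embed on write, so every stored row carries its vector.
        self._rows.append((text, toy_embed(text)))

    def search(self, query: str, k: int = 1) -> list[str]:
        # Embed the query the same way, then rank rows by similarity.
        q = toy_embed(query)
        ranked = sorted(self._rows, key=lambda row: cosine(q, row[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


store = InMemoryVectorStore()
for doc in ["return policy for shoes", "shipping times to europe", "gift card balance"]:
    store.add(doc)

top_match = store.search("shoes return policy", k=1)
```

A real embedding model captures meaning rather than exact word overlap, but the shape of the work is the same: every record and every query passes through the same embedding step before comparison.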

All of these vectors are then stored in the Astra DB database, built on Apache Cassandra®. Astra DB offers developers a seamless way to extract relevant context from masses of data, ready to feed into the LLM. The integration of LangStream and Astra DB is particularly useful for constructing high-quality prompts, making Gen AI interactions more intuitive and effective.

This ease of connecting and synchronizing all your data extends to populating the vector database, too. To illustrate, consider a scenario where you're consolidating real-time user behavior data with historical analytics. In a retail application, for example, you might want to associate the last few product clicks in a session with the user's historical preferences, such as fit and price range, to provide relevant, actionable recommendations during that session.

With LangStream's capabilities in Astra DB, this once complex operation is just a few clicks away. (See an example of this integration in action by watching this demonstration, “Build a Streaming AI Agent Using OpenAI, Vercel, and Astra DB in 10 Lines of Code.”)
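As a rough illustration of the retail scenario, the Python sketch below keeps a short window of recent clicks and merges it with stored preferences into prompt context. All names here (`UserProfile`, `Session`, `on_click`, `build_prompt`) and the three-click window are hypothetical choices for this sketch; none of them come from LangStream or Astra DB.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class UserProfile:
    """Historical preferences, as they might be loaded from a database."""
    preferred_fit: str
    price_range: tuple[int, int]


@dataclass
class Session:
    """Real-time state: only the most recent clicks are kept."""
    profile: UserProfile
    recent_clicks: deque = field(default_factory=lambda: deque(maxlen=3))

    def on_click(self, product: str) -> None:
        # A bounded deque silently drops the oldest click once it is full.
        self.recent_clicks.append(product)

    def build_prompt(self) -> str:
        # Merge streaming context (clicks) with historical context (profile).
        low, high = self.profile.price_range
        clicks = ", ".join(self.recent_clicks) or "none yet"
        return (
            f"Recently viewed: {clicks}. "
            f"Preferred fit: {self.profile.preferred_fit}. "
            f"Price range: ${low}-${high}. "
            "Recommend three products for this shopper."
        )


session = Session(UserProfile(preferred_fit="slim", price_range=(40, 90)))
for product in ["denim jacket", "chinos", "oxford shirt", "wool sweater"]:
    session.on_click(product)

prompt = session.build_prompt()
```

The resulting `prompt` string is what would be passed to an LLM. In a streaming setup, each click would arrive as an event rather than from a loop.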

With LangStream and the Astra DB vector database, application developers are now equipped with:

- Rapid prototyping: Quickly turn Gen AI concepts into prototypes, and then into production-grade applications.

- Event-driven architectures: Seamlessly combine Gen AI with streaming data, making applications more dynamic.

- Open source flexibility: Dive into LangStream's open source ecosystem, with the ability to customize using Python.

- Easy integration with popular services: Use built-in features to integrate with a range of services, including OpenAI, Hugging Face, and Google Vertex AI.

What will you build?

We believe that a database should be more than just a storage solution. It should be a platform that enables developers to create, innovate, and scale. By incorporating vector databases like Astra DB into LangStream agents, we're ensuring that application developers have the tools they need to easily bridge various data types with vector databases. This not only streamlines data operations but also paves the way for richer, more dynamic applications.

Be part of this transformative journey. Check out LangStream today.