Getting Started with DataStax Astra DB and Amazon Bedrock

DataStax Astra DB is a real-time vector database that can scale to billions of vectors. Amazon Bedrock is a managed service that makes foundation models available via a single API so you can easily work with and switch between multiple different foundation models. Together, Astra DB and AWS Bedrock make it much easier for you as a developer to get more accurate, secure, and compliant generative AI apps into production quickly.

In this post, we'll cover the basics on creating a simple question and answer system using an Astra DB vector database, embeddings, and foundation models from Amazon Bedrock. The data will comprise the lines from a single act of Shakespeare’s “Romeo and Juliet,” and you'll be able to ask questions and see how the model responds.

A side note: this is actually a modified play, "Romeo and Astra," where “Juliet” has been changed to “Astra” throughout the play. This is necessary because the model was trained on a huge amount of data from the internet, and it already knows how Juliet died. We will be referring to her as Astra in this post and in the data to make sure that the answers are coming from the documents explicitly given to the system.

What is Amazon Bedrock?

Amazon Bedrock is a managed service that makes foundation models available via a single API to facilitate working with and switching between multiple different foundation models. This example uses Amazon Titan Embeddings (amazon.titan-embed-text-v1) and Anthropic Claude 2 model (anthropic.claude-v2) for the LLM. This example could easily be converted to use one of the other foundation models such as; Amazon Titan, Meta Llama 2, or models from AI21 Labs.

What is Astra DB?

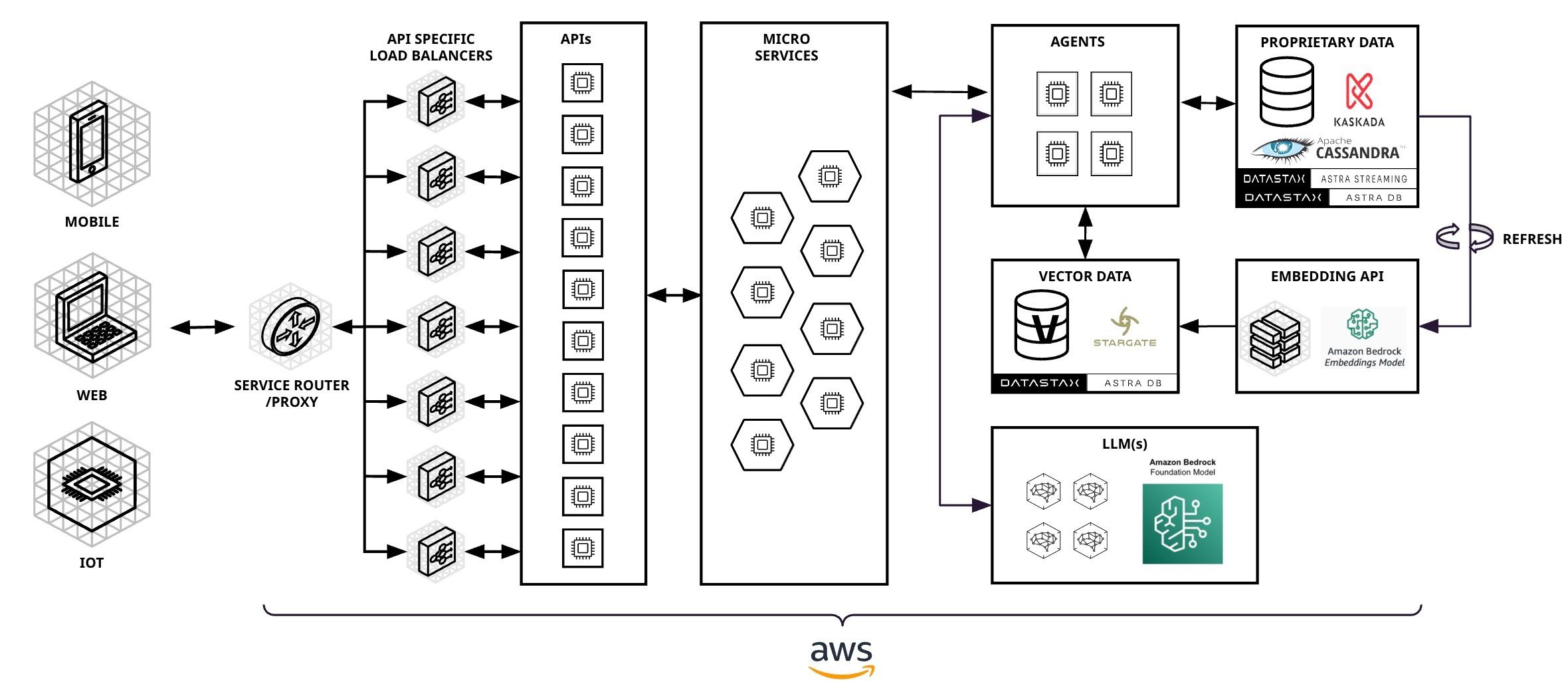

Astra DB is a real-time vector database that can scale to billions of vectors and embeddings; as such, it’s a critical component in a generative AI application architecture. Real-time data reads, writes, and availability are critical to prevent AI hallucinations. By combining Astra DB with Amazon Bedrock, you have the core components needed to build applications that use retrieval augmented generation (RAG), FLARE, and model fine-tuning.

Astra DB deploys to AWS natively so your entire application stack can live on the same consistent infrastructure and benefit from important security standards like PCI, SOC2, HIPAA, and ISO 27001. Astra DB also integrates with AWS PrivateLink, which allows private connectivity between LLMs and your Amazon Virtual Private Cloud (VPC) without exposing your traffic to the internet. The example notebook uses LangChain so the code should be easy to follow and it’ll be simple to add Astra DB and Amazon Bedrock to your existing applications.

Here are the steps!

This example is available as a notebook on Colab or you can upload the notebook to Amazon SageMaker Studio (here’s some information on getting started with SageMaker Studio).

This requires an Astra DB vector database and your security credentials. If you don't already have one, go through the prerequisites. You will also need to get temporary access credentials from AWS in order to authenticate your Amazon Bedrock commands.

In the example, we will run through the following steps:

- Set up your Python environment

- Import the needed libraries

- Setup Astra DB

- Setup AWS credentials

- Set up Amazon Bedrock objects

- Create a vector store in Astra DB

- Populate the database (this can take a few minutes)

- Ask questions about the data set

As long as you have your Astra DB credentials along with the AWS temporary credentials, this notebook should be a great starting point for exploring Astra DB vector functionality with Amazon Bedrock utilities. At the end of this post, we’ll give you some details about what you're doing in the notebook, but first here’s an overview.

How does a vector database work?

If you’re familiar with how vector databases work, you can skip this section. If not, here's a simple overview of how they work:

- The vector store is created, and it has a special field where embeddings will be put for each item in a table. A vector field can be set up for many different dimensionalities. For example, you might choose to use Amazon Titan Embeddings amazon.titan-embed-text-v1, which supports 1536 dimensions.

- The data is broken down, or chunked, into smaller pieces and each piece is given an embedding before loading the data into the database. Now the vector database is ready to tell you which of the items in the database are most similar to your query.

- The query is given an embedding, and then the vector database determines which items in the database are most similar to that item. There are various algorithms for this kind of search, but the most popular is ANN, or approximate nearest neighbor.

- At this point what you have is a list of documents which are similar to the document you provided. For many cases, this is sufficient, but for most cases, you will want a large language model (LLM) to analyze the returned documents so it can use them in predictions, recommendations, or in this case, answers to questions.

- After creating a prompt for the LLM (including the documents returned by the database), you can run your query through the LLM and get an answer in natural language. How you frame the prompt is very important; it's also important to remember that different LLMs are good at different tasks, and a prompt that works for one model might not work for others.

So, in the case of this example:

- General environment system setup is done

- All of the lines of Act 5, Scene 3 of Romeo and Juliet are processed

- A line is created with the Act and Scene and character

- That line is fed to the embedding engine (bedrock_embeddings)

- The line and its embedding are stored in the vector database

- A question is posed ("How did Astra die?")

- The question is fed to the embedding engine (bedrock_embeddings)

- That embedding is given to the vector database, asking for similar items

- The vector database returns some documents

- A prompt is created which includes the documents from the vector database

- The LLM is given the prompt and generates the answer

Notebook notes

Here are a few notes on what you'll be doing in the notebook. We encourage you to run the Jupyter Notebook in Amazon SageMaker Studio, or you can just work with the python code sample locally or in Collab.

- Set up your Python environment and import libraries In this section, the needed libraries are loaded into the environment. If you want to run this example locally in Python, the code can be found here, with comments indicating what you need to do to make it work.

- Input your Astra credentials Follow the instructions to get your token, your keyspace and your database id. When this step is done your system will be ready to make calls on your behalf to Astra DB, and a database session will have been established for you.

- AWS credential setup You'll need to retrieve your temporary credentials from your AWS account in order to fill in these credentials.

- Set up Amazon Bedrock objects This creates an Amazon Bedrock object called “bedrock” to be used later in the script (for the retrieval section) with Amazon Titan Embeddings.

- Create a vector store in Astra DB Using the Amazon Titan Embeddings and the database session, create a table that’s ready for vector search. You don't need to give it information about how to set up the table, as it has a set template it uses for creating these databases.

- Populate the database This section retrieves the contents of Scene 5, Act 3 of “Romeo and Astra,” and then populates the database with the lines of the play. Note that this can take a few minutes, but there are only 321 quotes so it's usually done fairly quickly. Also note that because we're putting all of this into the database, you can ask many different questions about what happened in that tragic act.

- Ask a question (and create a prompt) In this section you'll be setting the question and creating the prompt for the LLM to use to give you an answer. This is kind of fun, because you can be as specific as you want, or ask a more general question. The example I have ("How did Astra die?") is a pretty basic one. But who visited the tomb? Why was Romeo banished? Who killed Tybalt? Note that some of these happened in different acts, they're just here to give you some ideas about what questions to ask. Scroll back up to where the database was being populated, and see what questions you want to try out.

- Create a retriever This looks simple because it is. Using LangChain means that these tasks, which used to be very complex, are easy to understand. It's also printing out for you the documents which were retrieved, so you can see what the LLM is working with for the answer.

- Get the answer Using the Claude model, the engine processes the prompt and the content from the retrieved documents, and provides an answer to the question. Note that depending on the configuration of the request, you could get a different answer to the same questions.

Conclusion

Hopefully you now know how to set up a vector store with Astra DB. You populated that vector store with the lines from a page, using the Amazon Titan Embeddings. Finally, you queried that vector store to get specific lines from the page matching a query and used the Anthropic Claude 2 foundation model from Amazon Bedrock to answer your query using natural language.

While this may sound complex, vector search on Astra DB, when coupled with Amazon Bedrock, takes care of all of this for you with a fully integrated solution that provides all of the pieces you need for contextual data: from the nervous system built on data pipelines, to embeddings, all the way to core memory storage and retrieval, access, and processing—in an easy-to-use AWS cloud platform.

You’re probably already thinking about how to scale your generative AI applications into production. Consider these benefits of Astra DB on AWS. Astra lets you make your large language models smarter by adding your own unique data. When you pair DataStax's trusted and compliant platform with security features from AWS like AWS PrivateLink, you can create smart, secure, and production-scale AI powered apps using both Astra and Amazon Bedrock without worrying about data safety.

Try Astra for free today; it's also available in AWS Marketplace.